Creating an MVP for an unofficial v0.dev VS Code Extension clone

The race to generate React UI code in VS Code is on.

This extension is in no way affiliated with v0.dev or Vercel. It's just a dummy pet project we made to test out code generation in VS Code. We do aim to pursue similar goals, but definitely under a different name 🥸

Before we started working on this extension, another team with Grayhat was already working on an earlier version of the idea. The initial approach was to create a simple website embedded within VS Code using an iframe. v0.dev served as the core interface where users could input prompts and receive generated React component code. However, the iframe approach quickly ran into issues, as the website was eventually blocked by various security measures within VS Code. This made us rethink our strategy and led to the decision to build a native VS Code extension without relying on any website outside of VS Code.

Planning

To streamline things, we started by creating a detailed game plan that outlined the core functionalities we aimed to implement in the extension's proof of concept. These core features included:

- An input field for user prompts

- Image upload functionality

- Handling API calls to the hosted LLM and processing responses

- Displaying the generated React components as text in a UI element

Alongside these features, we established a timeline with specific delivery dates to keep the project on track.

Once the game plan was set, our next step was to familiarize ourselves with the documentation on how extensions work in VS Code. This research was crucial in helping us understand the necessary APIs and the architecture of a typical extension. With this knowledge in hand, we proceeded to set up a basic extension, similar to a "Hello World" page in web development. This initial setup served as the framework on which we built the entire extension.

Researching the docs

After briefly exploring the documentation, we began searching for and testing an API that would let us create a chat-like view in the primary sidebar of VS Code. Unfortunately, we could not find one that met our needs right away. So, for the first iteration, we settled on creating a chat-like view within the main editor. The goal was to build a basic skeleton in the main editor first, with the plan to move the entire interface to the primary sidebar in the next iteration. In this initial setup, we aimed to create a WebView in the main editor that asks for a description of the component.
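A minimal sketch of what such a WebView page could look like, not our exact markup. The helper name `buildPromptFormHtml`, the element ids, and the `{ type, text }` message shape are all assumptions made for illustration. The returned string would be assigned to `panel.webview.html`, where `panel` comes from `vscode.window.createWebviewPanel` with `enableScripts` turned on.

```typescript
// Hypothetical helper: builds the HTML for a minimal prompt form.
// The element ids and the message shape ({ type, text }) are illustrative.
function buildPromptFormHtml(nonce: string): string {
  return `<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta http-equiv="Content-Security-Policy"
        content="default-src 'none'; style-src 'unsafe-inline'; script-src 'nonce-${nonce}';">
</head>
<body>
  <textarea id="prompt" placeholder="Describe the component..."></textarea>
  <button id="send">Generate</button>
  <pre id="output"></pre>
  <script nonce="${nonce}">
    // acquireVsCodeApi is provided by VS Code inside every webview.
    const vscode = acquireVsCodeApi();
    document.getElementById('send').addEventListener('click', () => {
      const text = document.getElementById('prompt').value;
      vscode.postMessage({ type: 'generate', text });
    });
    // Show whatever the extension sends back as plain text.
    window.addEventListener('message', (event) => {
      document.getElementById('output').textContent = event.data.text;
    });
  </script>
</body>
</html>`;
}
```

The nonce ties the single inline script to the Content-Security-Policy, so only that script is allowed to run inside the WebView.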

For the code generation part, we initially decided on using Claude 3.5 Sonnet or GPT-4o, but realized that spending extra resources on just a proof of concept was unnecessary. Therefore, we moved ahead with codegen-350M-mono, picked from a pool of free/open-source LLMs such as StarCoder, CodeGeeX, OpenLLaMA, and Code Llama, primarily due to its popularity.

The Development Phase

Development was broken down into phases. The first phase produced the first version upon which the rest of the extension was built. Every phase followed a game plan that was written before the phase started. What do the game plans do? They are the official documents that list the features, delivery date, feature assignment, and other technical details for the phase.

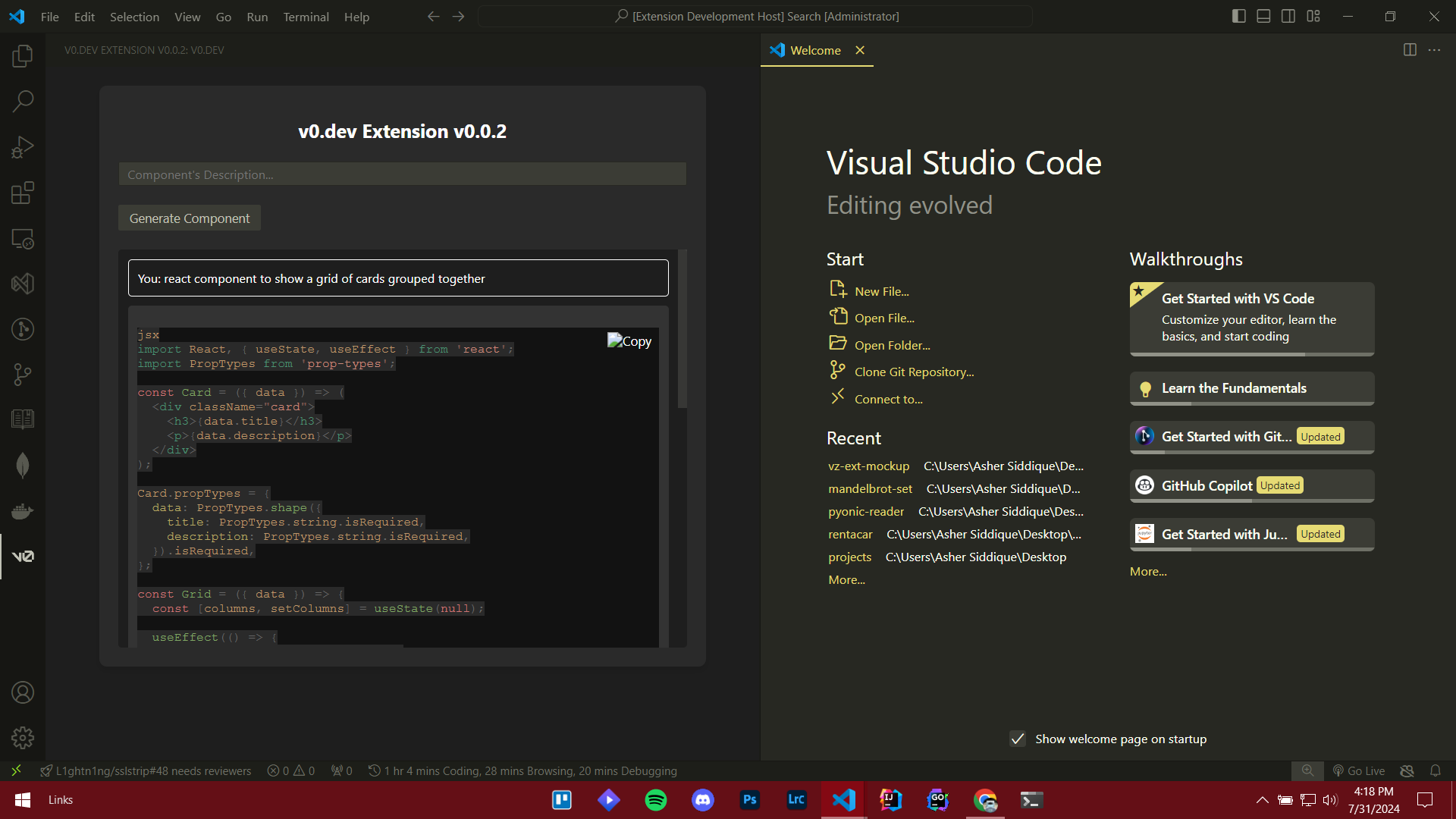

For the first iteration, we kept it simple and only focused on getting the input prompt, calling the LLM through Hugging Face's Inference API, and displaying the output. This was relatively simple: just a bunch of HTML and CSS, plus a few calls to the VS Code Extension API.
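The call to the hosted model can be sketched roughly as follows. This assumes the classic serverless Inference API endpoint (`https://api-inference.huggingface.co/models/<model id>`) and its text-generation response shape, an array of objects with a `generated_text` field. The names `generateCode`, `extractGeneratedText`, and `FetchLike`, as well as the parameter values, are illustrative and not taken from our code.

```typescript
// Minimal shape of the fetch function this sketch needs, so it can be
// exercised with a fake; the global fetch in recent Node versions fits it.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Assumed endpoint of the serverless Inference API.
const HF_API_BASE = "https://api-inference.huggingface.co/models";

// Sends the prompt to the hosted model and returns the generated text.
async function generateCode(
  fetchFn: FetchLike,
  modelId: string,
  prompt: string,
  apiToken: string
): Promise<string> {
  const response = await fetchFn(`${HF_API_BASE}/${modelId}`, {
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiToken}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      inputs: prompt,
      // Illustrative values; tune them for the chosen model.
      parameters: { max_new_tokens: 512, return_full_text: false },
    }),
  });
  if (!response.ok) {
    throw new Error(`Inference API request failed with status ${response.status}`);
  }
  return extractGeneratedText(await response.json());
}

// Pulls the text out of the expected [{ generated_text: "..." }] payload.
function extractGeneratedText(payload: unknown): string {
  if (Array.isArray(payload) && payload.length > 0) {
    const first = payload[0] as { generated_text?: unknown };
    if (typeof first?.generated_text === "string") {
      return first.generated_text;
    }
  }
  throw new Error("Unexpected response shape from the Inference API");
}
```

In an extension this would be called as `generateCode(fetch, modelId, prompt, token)`, with the token read from the extension's settings or secret storage rather than hard-coded.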

For the second iteration, we made some improvements as well as added new features. The improvements included moving the chat panel from the editor window to the primary sidebar window. The first version had an extra step to launch the chat window, which was also removed in the second version so that the process would be straightforward. The feature that was added was a copy button so that the end user can use the generated code in their project.
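One way the copy button could be wired up, as a sketch rather than our exact code: the WebView posts a message with the generated code, and the extension side writes it to the clipboard. The `copy` message type and the `handleWebviewMessage` helper are hypothetical; `ClipboardLike` is a stand-in for `vscode.env.clipboard`, whose `writeText` method has this shape.

```typescript
// Structural stand-in for vscode.env.clipboard, limited to writeText.
interface ClipboardLike {
  writeText(value: string): PromiseLike<void>;
}

// Hypothetical message shape posted by the WebView's copy button.
interface WebviewMessage {
  type: string;
  code?: string;
}

// Returns true when the message was a copy request and was handled.
async function handleWebviewMessage(
  message: WebviewMessage,
  clipboard: ClipboardLike
): Promise<boolean> {
  if (message.type === "copy" && typeof message.code === "string") {
    await clipboard.writeText(message.code);
    return true;
  }
  return false;
}
```

On the extension side this would be hooked up with something like `webview.onDidReceiveMessage((m) => handleWebviewMessage(m, vscode.env.clipboard))`, while the button in the WebView calls `vscode.postMessage({ type: 'copy', code })`.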

The third and final iteration added syntax highlighting with the Shiki syntax highlighter and replaced the default CSS styles with a VS Code UI library, so the extension matches the editor's look and feel.

The team

We were a team of two, and the development of the extension was a collaborative effort in which we divided the work based on our strengths. Aitsam focused on front-end development, handling the design and implementation of the user interface and making sure the extension felt like a part of VS Code.

Asher, on the other hand, took charge of backend development. This covered managing the API integration, handling the logic for making API calls to the LLM, integrating it with the front end, and processing the responses. Asher's work ensured that the extension could communicate with the AI model properly.

Navigating the Constraints

The previous team's biggest blocker eventually turned out to be v0.dev's own website. Soon after the initial launch, the website stopped allowing itself to be iframed inside VS Code. The team tried its best to uncover the underlying APIs, but it was a hassle trying to set up Vercel Auth to work alongside the original website. There also wasn't an official REST API.

For our team, the biggest blocker we faced while developing an MVP was the LLM. What we failed to realize was that codegen-350M-mono is an autocomplete model: it is good at continuing code, not at following instructions. What we actually wanted was something that could turn prompts into code. The first version of the MVP was tested using codegen, and the possible improvements were noted down. The search for a better LLM led us to Mistral-7B, specifically Instruct-v0.3. Mistral turned out to be quite a good model, as it was able to handle engineered prompts and the output was on point as well.
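To make "engineered prompts" concrete, here is a minimal sketch, assuming the `[INST] ... [/INST]` wrapper that Mistral's instruct models expect. The instruction wording and both helper names (`buildInstructPrompt`, `extractCodeBlock`) are illustrative and not the prompt we actually shipped.

```typescript
// Three backticks, built at runtime so this sample stays readable.
const FENCE = "`".repeat(3);

// Wraps a component description in an instruct-style prompt.
// The instruction text is an illustrative assumption.
function buildInstructPrompt(description: string): string {
  const instruction =
    "You are a React code generator. Return a single functional React " +
    "component in one fenced code block and nothing else.\n\n" +
    "Component description: " + description.trim();
  return `[INST] ${instruction} [/INST]`;
}

// Returns the contents of the first fenced code block in the model output,
// or the trimmed output itself when no fenced block is present.
function extractCodeBlock(modelOutput: string): string {
  const pattern = new RegExp(FENCE + "[a-zA-Z]*\\n([\\s\\S]*?)" + FENCE);
  const match = modelOutput.match(pattern);
  return (match ? match[1] : modelOutput).trim();
}
```

Asking for a single fenced block makes the response easier to post-process, since instruct models often add a sentence or two of explanation around the code.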

Among the improvements we noted down, the second biggest blocker was moving the chat panel from the editor in VS Code to the primary sidebar. This required a complete refactor of the HTML, and there wasn't much in the documentation about using WebViews in the primary sidebar. It took a good half a week, but we were able to implement it, and the extension ended up with a very natural look and feel.
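For anyone facing the same gap in the docs, the rough shape of a sidebar WebView is a class with a `resolveWebviewView` method, registered through `vscode.window.registerWebviewViewProvider`. The sketch below is not our exact code: the view id `reactGen.chatView` and the markup are made up, and `WebviewViewLike` is a stand-in for the parts of `vscode.WebviewView` that are used here.

```typescript
// Structural stand-in for vscode.WebviewView, limited to what this sketch uses.
interface WebviewViewLike {
  webview: { options: { enableScripts?: boolean }; html: string };
}

class ChatViewProvider {
  // Hypothetical id; it must match the view id declared in package.json.
  static readonly viewType = "reactGen.chatView";

  // VS Code calls this when the sidebar view first becomes visible.
  resolveWebviewView(view: WebviewViewLike): void {
    // Scripts are disabled by default in WebViews and must be enabled.
    view.webview.options = { enableScripts: true };
    view.webview.html =
      "<!DOCTYPE html><html><body>" +
      '<textarea id="prompt"></textarea>' +
      '<button id="send">Generate</button>' +
      "</body></html>";
  }
}
```

In `activate`, the provider would be registered with `vscode.window.registerWebviewViewProvider(ChatViewProvider.viewType, new ChatViewProvider())`, and `package.json` would need a matching entry under `contributes.views` with `"type": "webview"`, placed in a view container that lives in the primary sidebar.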

Possible Improvements

One place where the extension could improve massively is the output UI element. Instead of displaying the generated code, we could render it. This would help the end user quickly determine whether the generated component is correct or needs to be generated again, all without relaunching their project. Our team lead gave us this idea, but it never came to fruition due to time constraints.